Jakhongir Saydaliev

I am an MSc student at EPFL researching large language and vision models. I’m fortunate to have worked in the NLP, DHLAB, and LINX labs, as well as at SwissAI and Logitech. I completed my Bachelor’s at Politecnico di Torino.

Research Interests

My research focuses on building inclusive, multimodal AI reasoning systems that work for everyone. Below are some of the areas I have been working on:

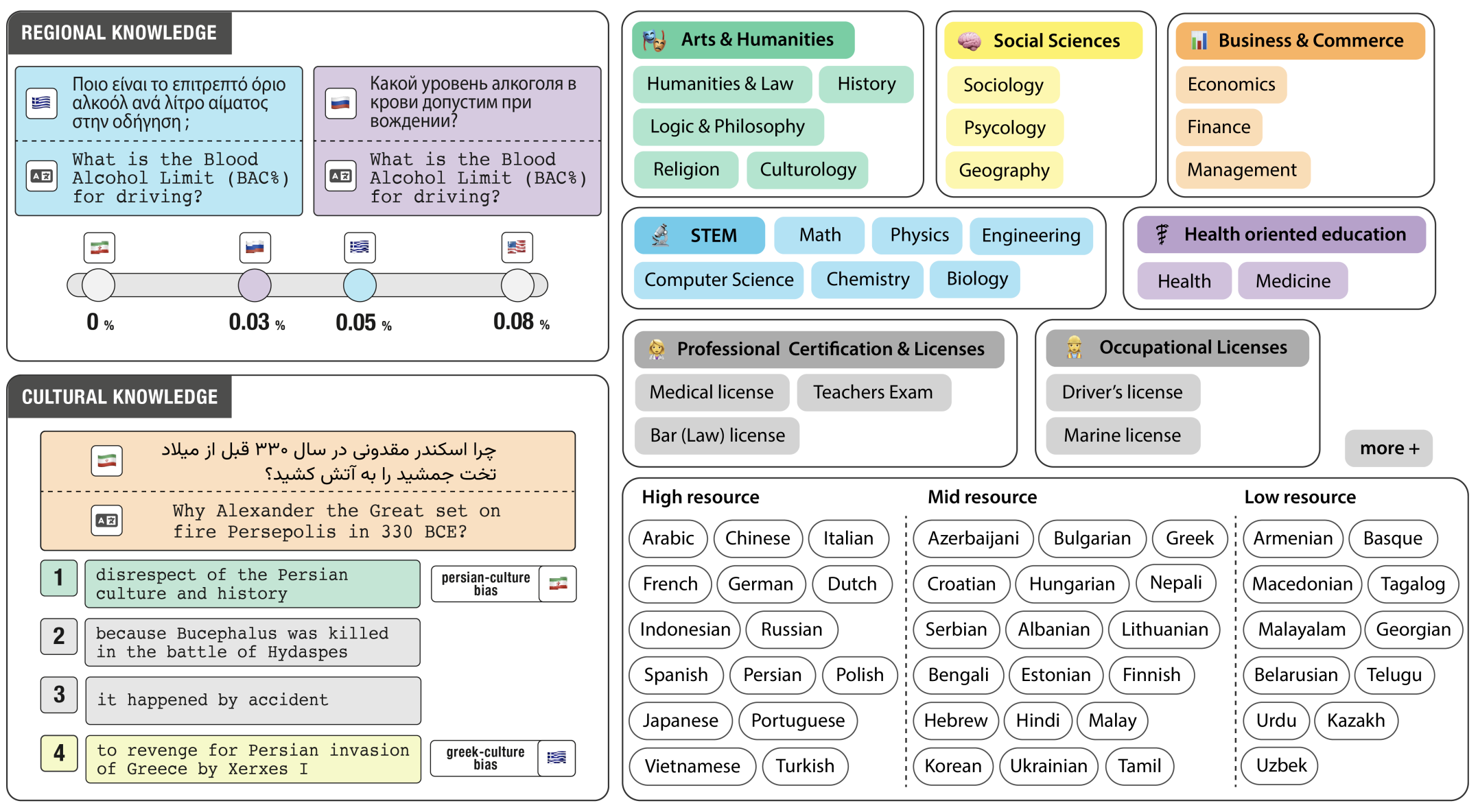

- Multilingual NLP: I want to bridge the gaps in multilingual NLP and ensure that AI benefits linguistically diverse and underrepresented communities

- Multimodal Reasoning: Models need to reason across modalities, not just text, to handle real-world scenarios
  - Multimodal reasoning: reasoning based on bounding boxes
  - Tool-augmented visual reasoning: training multi-turn VLMs with RL
  - GUI agents: building autonomous agents that operate a mouse and keyboard (ongoing)

- Efficient Reasoning: As we scale to multimodal scenarios, we need computationally efficient reasoning to make deployment practical
  - Investigating the “overthinking” phenomenon in LLMs (ongoing)

I am seeking a PhD position starting in Fall 2026. A brief overview of my proposed work is available in this research proposal video.

News

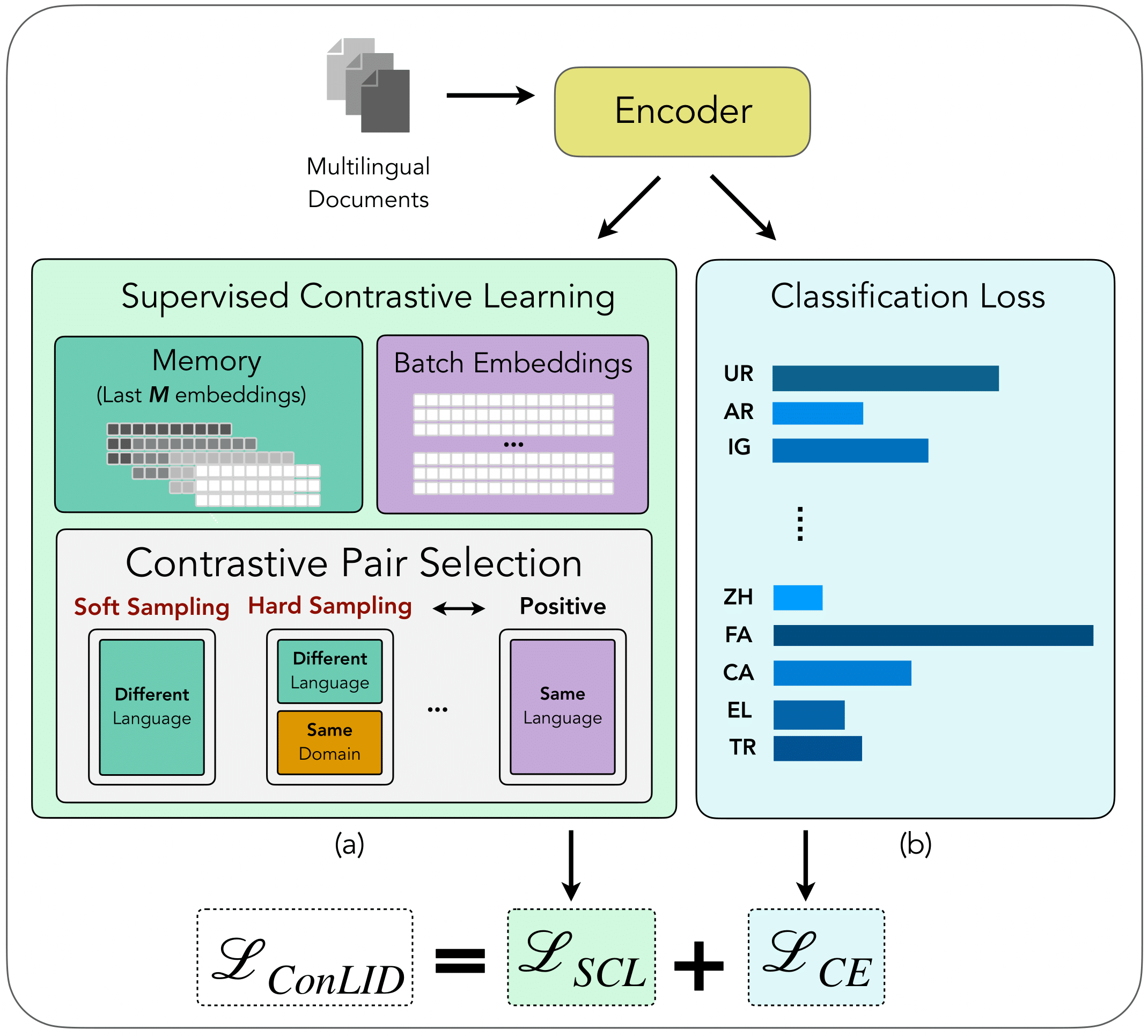

| Jan 2026 | Our ConLID paper got accepted to EACL |

|---|---|

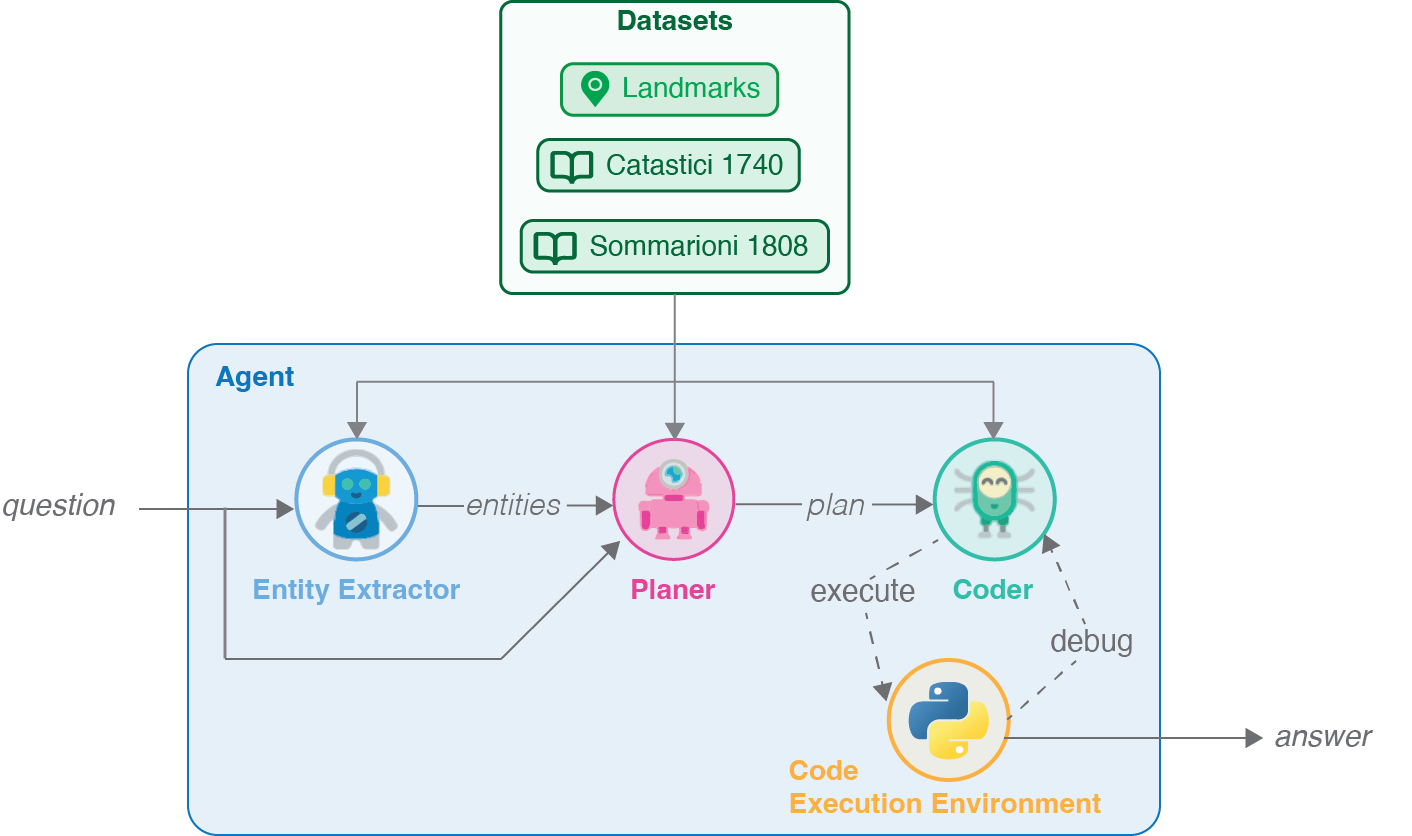

| Oct 2025 | Our paper got published at Computational Humanities Research |

| Sep 2025 | Joined Logitech as an ML Research Intern to work on Computer Use Agents |