Document Retrieval

Building an efficient information retrieval (IR) system for 7 languages under tight compute limits

When you need to search 200,000+ documents across multiple languages, and do it fast, the usual tricks might not work. Pre-trained language models deliver impressive results, but they’re computationally expensive. Our task: build a multilingual information retrieval system that’s both effective and resource-efficient.

The setup was straightforward: retrieve the single most relevant document for each query from a corpus spanning 7 languages (Arabic, German, English, Spanish, French, Italian, and Korean). But here’s the catch: inference had to complete within 10 minutes, and we were limited to a Kaggle notebook’s resources.

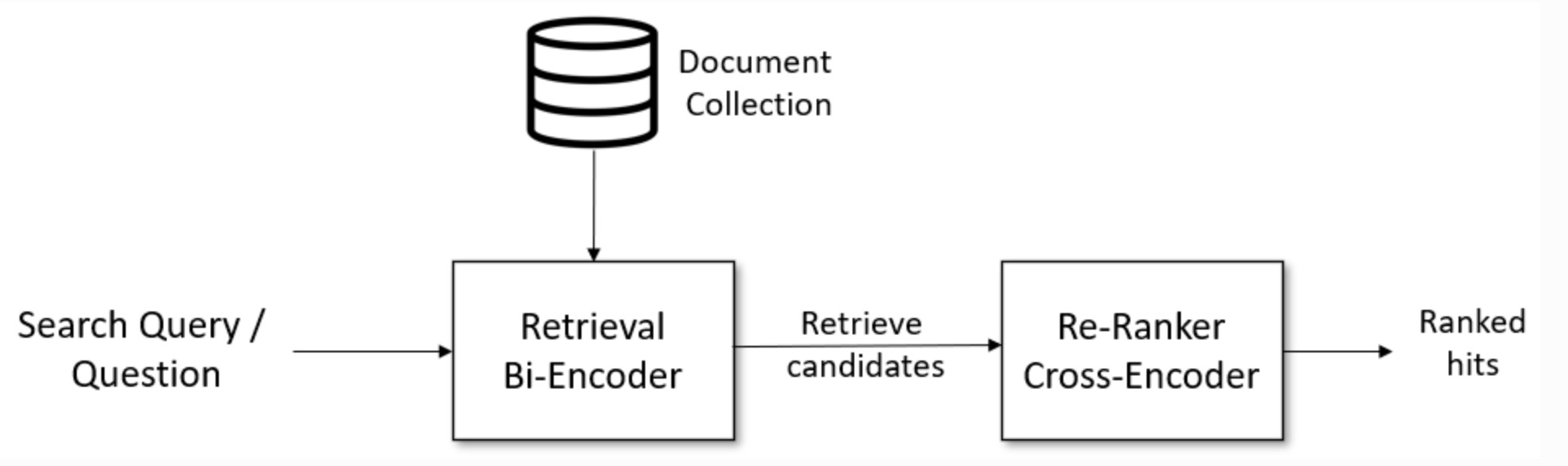

Our Approach

We tested three categories of methods, ranging from classical to modern:

TF-IDF

We started with TF-IDF, a lexical method that weights terms by their frequency within a document and their rarity across the corpus. The baseline scored 0.52 on our test metric, Recall@10 (the fraction of queries whose relevant document appears among the top 10 results). We improved it by boosting high-IDF terms, since rare terms often point to query-specific documents: multiplying the weights of the top 2 IDF terms by a scalar raised the score to 0.5871, a relative gain of more than 10%.
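The boosting trick is easy to reproduce in a few lines. The sketch below is a simplification under stated assumptions, not the team's code: it scores documents by summing tf·idf over the query terms (rather than, say, cosine similarity of full TF-IDF vectors), assumes the "top 2 IDF terms" are the two rarest terms in each query, and uses the hypothetical parameter names `boost` and `n_boost` for the scalar multiplier.

```python
import math
from collections import Counter


def build_idf(docs: list[list[str]]) -> dict[str, float]:
    """Smoothed IDF, ln((1 + N) / (1 + df)) + 1, the form scikit-learn uses by default."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}


def boosted_tfidf_scores(query: list[str], docs: list[list[str]],
                         idf: dict[str, float], boost: float = 1.0,
                         n_boost: int = 2) -> list[float]:
    """Score each document by summing tf * idf over the query terms.

    The `n_boost` query terms with the highest IDF have their weight multiplied
    by `boost` (hypothetical names standing in for the post's scalar multiplier).
    """
    terms = [t for t in dict.fromkeys(query) if t in idf]  # unique terms seen in the corpus
    top = set(sorted(terms, key=idf.__getitem__, reverse=True)[:n_boost])
    weights = {t: idf[t] * (boost if t in top else 1.0) for t in terms}
    scores = []
    for doc in docs:
        tf = Counter(doc)
        scores.append(sum(tf[t] * w for t, w in weights.items()))
    return scores


docs = [["cat", "cat", "cat", "x"], ["zebra", "y"], ["cat", "y"], ["x", "y"]]
idf = build_idf(docs)
plain = boosted_tfidf_scores(["cat", "zebra"], docs, idf, boost=1.0, n_boost=1)
boosted = boosted_tfidf_scores(["cat", "zebra"], docs, idf, boost=3.0, n_boost=1)
```

With `boost=1.0`, the document that repeats the more common term "cat" ranks first; boosting the rarest query term ("zebra") threefold flips the top result to the document that contains it.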

BM25: The Winner

BM25 refines the TF-IDF approach by accounting for document length and term-frequency saturation. It’s a simple formula, but it works remarkably well.
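For reference, the standard Okapi BM25 score of a document $D$ for a query $Q$ with terms $q_1, \dots, q_n$ is shown below. The post doesn't state which IDF variant or parameter values were used, so the smoothed (Lucene-style) IDF and the usual defaults mentioned afterwards are assumptions.

$$
\mathrm{score}(D, Q) = \sum_{i=1}^{n} \mathrm{IDF}(q_i)\,\frac{f(q_i, D)\,(k_1 + 1)}{f(q_i, D) + k_1\left(1 - b + b\,\frac{|D|}{\mathrm{avgdl}}\right)},
\qquad
\mathrm{IDF}(q_i) = \ln\!\left(\frac{N - n(q_i) + 0.5}{n(q_i) + 0.5} + 1\right)
$$

Here $f(q_i, D)$ is the frequency of $q_i$ in $D$, $|D|$ is the document length, $\mathrm{avgdl}$ is the average document length, $N$ is the number of documents, and $n(q_i)$ is the number of documents containing $q_i$. The saturation parameter $k_1$ (typically 1.2 to 2.0) caps how much repeated occurrences can add, and $b$ (typically 0.75) controls how strongly long documents are penalized.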

The results were striking: BM25 scored 0.7714 on the dev set and 0.7562 on the final test submission, with no complex models needed.
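The post doesn't include its BM25 code, so here is a minimal pure-Python sketch of Okapi BM25 over pre-tokenized documents. The defaults `k1=1.5` and `b=0.75`, the Lucene-style IDF, and whitespace tokenization in the example are assumptions, not the team's settings; a production system would more likely use an existing library or search engine.

```python
import math
from collections import Counter


class BM25:
    """Minimal Okapi BM25 scorer (a sketch, not the team's implementation)."""

    def __init__(self, docs: list[list[str]], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.tfs = [Counter(doc) for doc in docs]
        self.doc_lens = [len(doc) for doc in docs]
        self.avgdl = sum(self.doc_lens) / len(docs)
        n = len(docs)
        df = Counter(term for doc in docs for term in set(doc))
        # Lucene-style IDF: stays positive even for terms in most documents.
        self.idf = {t: math.log((n - c + 0.5) / (c + 0.5) + 1) for t, c in df.items()}

    def score(self, query: list[str], idx: int) -> float:
        tf, dl = self.tfs[idx], self.doc_lens[idx]
        # Length normalization: longer-than-average documents get a larger denominator.
        norm = self.k1 * (1 - self.b + self.b * dl / self.avgdl)
        total = 0.0
        for term in query:
            f = tf.get(term, 0)
            if f:  # a term present in the document is always in self.idf
                total += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return total

    def top_k(self, query: list[str], k: int = 10) -> list[int]:
        scores = [self.score(query, i) for i in range(len(self.tfs))]
        return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


corpus = ["the cat sat on the mat", "dogs chase cats", "the quick brown fox", "a cat and a dog"]
bm25 = BM25([text.split() for text in corpus])
ranking = bm25.top_k(["cat"], k=2)  # -> [3, 0]
```

Both documents 0 and 3 contain "cat" once, but document 3 is shorter, so length normalization ranks it first. Note that "cats" does not match "cat" here: without stemming or subword tokenization, BM25 only rewards exact token overlap.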

Text Embeddings

We tested multilingual-e5-small, a compact embedding model with under 250M parameters. We tried two chunking strategies (20-word and 200-word sequences) to handle the attention mechanism’s length limitations. The results were disappointing: e5 with 200-word chunks scored only 0.5414, and shorter chunks performed even worse at 0.3028. Longer chunks provided better context but created language imbalances.
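The chunking step can be sketched as follows. This rests on assumptions the post doesn't confirm: chunks are non-overlapping word windows, and a document's score is the maximum cosine similarity between the query embedding and any of its chunk embeddings. In practice the vectors would come from multilingual-e5-small (for example via the sentence-transformers library, with the "query: " and "passage: " input prefixes its model card prescribes); plain float lists stand in for them here so the example runs without the model.

```python
import math


def chunk_words(text: str, size: int) -> list[str]:
    """Split a document into consecutive, non-overlapping windows of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def doc_score(query_vec: list[float], chunk_vecs: list[list[float]]) -> float:
    """Score a document by its best-matching chunk (max-pooling is an assumption)."""
    return max((cosine(query_vec, c) for c in chunk_vecs), default=0.0)


# A 450-word document becomes three 200-word-window chunks (200, 200, 50 words).
chunks = chunk_words(" ".join(f"w{i}" for i in range(450)), 200)
```

Max-pooling rewards a document for its single best passage; averaging chunk scores instead would favor documents that stay on topic throughout.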

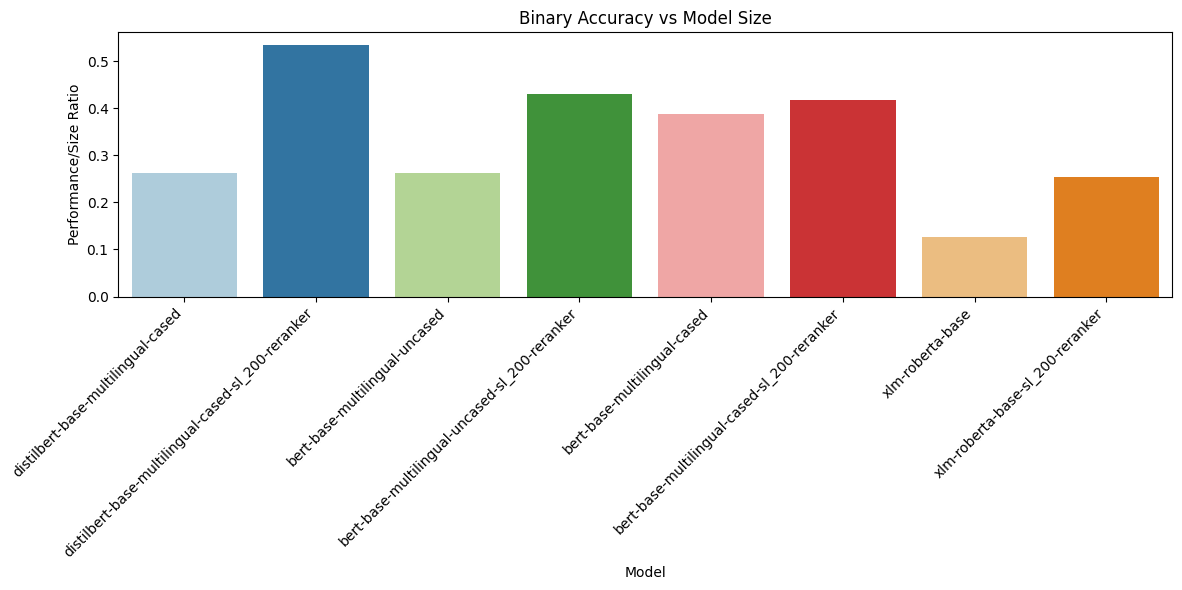

Reranking: The Diminishing Returns

We fine-tuned DistilBERT as a reranker to reorder the retrieved results. Unfortunately, reranking degraded performance for most methods, likely because the reranker model is small. It slightly helped the text-embedding method but hurt the strong keyword-based methods, so our final submission used pure BM25.
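The post doesn't show its reranking code; the sketch below illustrates the usual two-stage shape. A first-stage retriever (TF-IDF, e5, or BM25) proposes candidate ids, and a cross-encoder-style scorer reorders them. `cross_score` is a hypothetical stand-in for the fine-tuned DistilBERT model; `overlap_score` is a toy word-overlap function used only so the example runs.

```python
from typing import Callable, Sequence


def rerank(query: str, candidates: Sequence[int], docs: Sequence[str],
           cross_score: Callable[[str, str], float], k: int = 10) -> list[int]:
    """Reorder first-stage candidate ids by a pairwise (query, doc) score; keep the top k."""
    scored = sorted(candidates, key=lambda i: cross_score(query, docs[i]), reverse=True)
    return scored[:k]


def overlap_score(query: str, doc: str) -> float:
    """Toy stand-in for the fine-tuned reranker: number of shared words."""
    return float(len(set(query.split()) & set(doc.split())))


docs = ["red apple", "green apple pie", "blue sky"]
first_stage = [2, 0, 1]  # hypothetical ids from the first-stage retriever, best first
top = rerank("green apple", first_stage, docs, overlap_score, k=2)  # -> [1, 0]
```

Because the reranker's score fully replaces the first-stage ordering in this design, a document it misjudges can be pushed out of the top results even when BM25 had ranked it correctly. Also, if the candidate pool is exactly the top 10, reordering it cannot change Recall@10; the reranker only affects that metric when it rescores a larger pool.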

The Results

The table below compares all methods for each language, both on their own and combined with the fine-tuned reranker.

| Method | ar | de | en | es | fr | it | ko |
|---|---|---|---|---|---|---|---|
| TF-IDF (Baseline) | 0.460 | 0.450 | 0.495 | 0.760 | 0.715 | 0.595 | 0.465 |
| TF-IDF (Optimal) | 0.585 | 0.560 | 0.450 | 0.775 | 0.790 | 0.610 | 0.605 |
| e5_sl200 | 0.420 | 0.425 | 0.520 | 0.685 | 0.670 | 0.555 | 0.515 |
| BM25 | 0.735 | 0.665 | 0.745 | 0.910 | 0.910 | 0.770 | 0.625 |
| TF-IDF + Rerank (Baseline) | 0.460 | 0.470 | 0.490 | 0.755 | 0.725 | 0.610 | 0.460 |
| TF-IDF + Rerank (Optimal) | 0.580 | 0.555 | 0.450 | 0.775 | 0.790 | 0.605 | 0.600 |
| e5_sl200 + Rerank | 0.440 | 0.435 | 0.525 | 0.675 | 0.685 | 0.565 | 0.520 |
| BM25 + Rerank | 0.725 | 0.660 | 0.735 | 0.895 | 0.895 | 0.765 | 0.620 |

BM25 scored highest in all 7 languages, with particularly strong results in Spanish (0.910) and French (0.910). Even on Korean, its weakest language, it reached 0.625 and still led every other method.

Takeaways

In a constrained setting like ours, a classical information retrieval method such as BM25 outperformed a small embedding model: it is efficient, interpretable, and surprisingly effective at keyword matching. Text embeddings excel at semantic understanding, but they’re overkill when you don’t have the computational resources to leverage them properly.