Visual Reasoning

Explored GRPO to enhance visual question answering in vision-language models

We investigate how Group Relative Policy Optimization (GRPO), a reinforcement learning technique, can enhance visual reasoning capabilities in vision-language models (VLMs). Our study examines five key dimensions: the alignment between reasoning chains and final answers, grounding reasoning with bounding boxes, generalization from synthetic to real-world data, bias mitigation, and prompt-based reasoning induction. Our findings show that GRPO improves performance and generalization on out-of-domain datasets when structured rewards are used, but reasoning alignment remains imperfect and prompt tuning proves challenging. These results highlight both the potential and current limitations of reinforcement learning for advancing visual reasoning in VLMs.

Methods

Our approach addresses five distinct research questions through targeted experiments:

Reasoning-Answer Alignment. We quantified misalignment between model reasoning and final answers using an LLM-as-judge protocol with GPT-4 mini, assessing whether reasoning traces logically support predicted answers.
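
As a minimal sketch of how such an alignment score can be computed, the snippet below averages binary verdicts over (reasoning, answer) pairs. The `Judge` signature and the `keyword_judge` stand-in are hypothetical; in the actual protocol the verdict would come from an LLM call, which is not reproduced here.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical judge signature: (reasoning, answer) -> True if the reasoning
# logically supports the answer. In practice this would wrap an LLM call.
Judge = Callable[[str, str], bool]


def alignment_rate(samples: Iterable[Tuple[str, str]], judge: Judge) -> float:
    """Fraction of (reasoning, answer) pairs the judge marks as consistent."""
    verdicts = [judge(reasoning, answer) for reasoning, answer in samples]
    if not verdicts:
        raise ValueError("no samples to judge")
    return sum(verdicts) / len(verdicts)


def keyword_judge(reasoning: str, answer: str) -> bool:
    """Toy stand-in for the LLM judge: is the answer mentioned in the reasoning?"""
    return answer.lower() in reasoning.lower()


samples = [
    ("The cup sits on top of the table, so the relation is 'on'.", "on"),
    ("The dog is to the left of the car.", "right"),
]
rate = alignment_rate(samples, keyword_judge)
```

Alignment is computed independently of correctness, so a model can score high on accuracy while its reasoning traces contradict the answers it gives.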

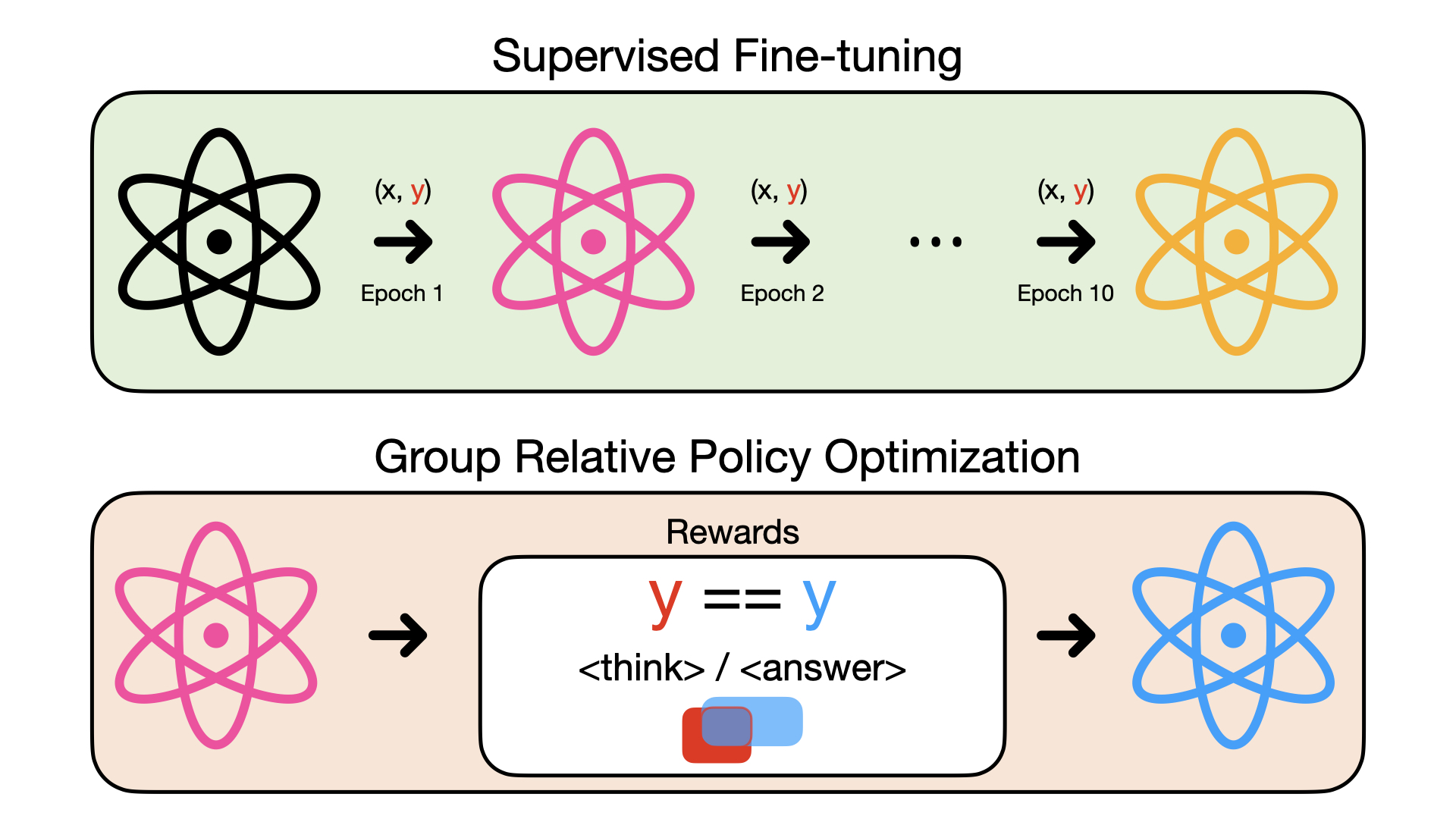

Grounded Reasoning. As illustrated in the methodology overview, we employed a two-stage training approach: first, supervised fine-tuning (SFT) to teach the model to generate bounding boxes within its reasoning chains, followed by GRPO$^{[1]}$ training with three reward functions (accuracy, format consistency, and IoU scores).
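
The three reward signals and GRPO's group-relative normalization can be sketched as follows. This is an illustration under stated assumptions, not our training code: the `<think>`/`<answer>` template, the corner-format boxes, and the unweighted sum in `total_reward` are all assumed for the example. The advantage follows the outcome-level formulation in [1], where each reward is standardized against the other completions sampled for the same prompt.

```python
import re
from statistics import mean, pstdev
from typing import List, Sequence

Box = Sequence[float]  # (x1, y1, x2, y2); corner format is an assumption

# Assumed output template; the real format string is not specified here.
FORMAT_RE = re.compile(r"^<think>.*</think>\s*<answer>(.*)</answer>$", re.DOTALL)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the assumed template, else 0.0."""
    return 1.0 if FORMAT_RE.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, gold: str) -> float:
    """1.0 if the extracted answer matches the gold answer (case-insensitive)."""
    m = FORMAT_RE.match(completion.strip())
    return 1.0 if m and m.group(1).strip().lower() == gold.strip().lower() else 0.0


def total_reward(completion: str, gold: str, pred_box: Box, gold_box: Box) -> float:
    """Unweighted sum of the three rewards (the weighting is an assumption)."""
    return (accuracy_reward(completion, gold)
            + format_reward(completion)
            + iou(pred_box, gold_box))


def group_relative_advantages(rewards: List[float]) -> List[float]:
    """Standardize rewards within one group of sampled completions."""
    mu, sigma = mean(rewards), pstdev(rewards)
    if sigma == 0:
        return [0.0] * len(rewards)  # identical rewards carry no learning signal
    return [(r - mu) / sigma for r in rewards]
```

Because advantages are relative within a group, a completion is only reinforced when it scores above its siblings; a group in which every sample earns the same reward contributes no gradient.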

Synthetic-to-Real Generalization. We trained models on the synthetic Rel3D dataset and evaluated on both Rel3D and the real-world SpatialSense dataset to assess transfer learning capabilities. We also experimented with augmented inputs including depth images and bounding boxes.

Bias Mitigation. We created increasingly biased variants of the Visual Spatial Reasoning (VSR) dataset through targeted undersampling and trained models using both SFT and GRPO to measure their robustness to spurious correlations.
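
One way to build such variants is sketched below, assuming the bias is injected by tying each spatial relation word to a single label. The `Example` layout, the `keep_minority` parameter, and the majority-label rule are illustrative assumptions rather than the exact procedure we used.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, bool]  # (relation word, label); images omitted for brevity


def undersample_biased(examples: List[Example], keep_minority: float,
                       seed: int = 0) -> List[Example]:
    """Keep every example carrying the majority label of its relation, and only
    a fraction `keep_minority` of the others, so the text predicts the label."""
    rng = random.Random(seed)
    by_relation: Dict[str, List[Example]] = defaultdict(list)
    for ex in examples:
        by_relation[ex[0]].append(ex)

    biased: List[Example] = []
    for group in by_relation.values():
        positives = sum(label for _, label in group)
        majority = positives * 2 >= len(group)  # ties resolve to True
        for ex in group:
            if ex[1] == majority or rng.random() < keep_minority:
                biased.append(ex)
    return biased


data = ([("on", True)] * 3 + [("on", False)] * 2
        + [("under", False)] * 4 + [("under", True)] * 1)
strongly_biased = undersample_biased(data, keep_minority=0.0)
```

With `keep_minority=0.0` the relation word alone determines the label, which corresponds to the extreme case in which a text-only classifier can reach perfect accuracy; intermediate values give milder bias.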

Prompt Engineering. We applied soft prompt tuning using the PEFT library, optimizing learnable token prefixes while keeping base model weights frozen, comparing answer-only and reasoning-chain fine-tuning strategies.
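
The mechanism behind soft prompt tuning can be illustrated without any library: a small set of learnable vectors is prepended to the frozen token embeddings, and only those vectors receive gradient updates. The sketch below is dependency-free and does not use the PEFT API; the function names and the toy embedding width of 4 are hypothetical.

```python
from typing import List

Vector = List[float]


def prepend_soft_prompt(soft_prompt: List[Vector],
                        token_embeddings: List[Vector]) -> List[Vector]:
    """Concatenate learnable prompt vectors in front of frozen token embeddings."""
    if not token_embeddings:
        raise ValueError("need at least one token embedding")
    width = len(token_embeddings[0])
    if any(len(v) != width for v in soft_prompt):
        raise ValueError("soft prompt width must match the embedding width")
    return [list(v) for v in soft_prompt] + [list(v) for v in token_embeddings]


def trainable_parameter_count(num_virtual_tokens: int, hidden_size: int) -> int:
    """Only the soft prompt is trained; the base model contributes nothing."""
    return num_virtual_tokens * hidden_size


# Toy example: 5 virtual tokens, embedding width 4 (illustrative only).
soft_prompt = [[0.0] * 4 for _ in range(5)]
tokens = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
sequence = prepend_soft_prompt(soft_prompt, tokens)
```

The trainable parameter count grows only with the number of virtual tokens times the embedding width, which makes the method cheap but also limits how much behavior it can change.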

Results

Reasoning-Answer Alignment

Our alignment analysis reveals a concerning trade-off: while GRPO improves task accuracy, it paradoxically decreases reasoning-answer alignment.

| Model | Reasoning | Accuracy | Alignment |
|---|---|---|---|
| Qwen-Instruct | ✓ | 70.59% | 88.29% |
| Qwen-SFT | ✗ | 84.12% | — |
| Qwen-GRPO | ✓ | 86.47% | 82.86% |

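GRPO training achieves higher accuracy than SFT (86.47% vs 84.12%) but lowers alignment relative to Qwen-Instruct from 88.29% to 82.86%, a drop of about 5.4 percentage points (roughly 6% in relative terms). This suggests that detailed reasoning traces may reflect pattern-matching rather than genuine logical inference.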

Grounded Reasoning Performance

Our two-stage grounding approach demonstrates strong improvements, particularly on out-of-domain data:

| Methods | Accuracy Reward | Format Reward | IoU Reward | DrivingVQA (OOD) | A-OKVQA (In-Domain) |
|---|---|---|---|---|---|
| SFT-1 | — | — | — | 54.47 | 88.03 |
| SFT-10 | — | — | — | 51.91 | 85.36 |
| GRPO | ✓ | ✓ | ✗ | 57.89 | 88.56 |
| GRPO | ✓ | ✗ | ✓ | 61.31 | 88.3 |
| GRPO | ✓ | ✓ | ✓ | 61.31 | 88.3 |

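The most significant gains appear on the out-of-domain DrivingVQA dataset, where GRPO with IoU rewards reaches 61.31 versus 54.47 for SFT-1, a gain of 6.84 points (about 12% relative), while in-domain A-OKVQA accuracy stays essentially flat. The bounding box-based reward proves particularly valuable for generalization: GRPO without it reaches only 57.89 on DrivingVQA.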
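
The 12% figure appears to be a relative gain over SFT-1 rather than a difference in points. The short check below makes the distinction explicit using the DrivingVQA numbers from the table; the helper names are ours and purely illustrative.

```python
def absolute_gain(new: float, base: float) -> float:
    """Difference in points between two scores."""
    return new - base


def relative_gain(new: float, base: float) -> float:
    """Improvement expressed as a percentage of the baseline score."""
    return (new - base) / base * 100.0


grpo_iou, sft_1 = 61.31, 54.47  # DrivingVQA (OOD) scores from the table
abs_pts = absolute_gain(grpo_iou, sft_1)  # about 6.84 points
rel_pct = relative_gain(grpo_iou, sft_1)  # about 12.6 percent
```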

Synthetic-to-Real Generalization

Training on synthetic Rel3D data did not transfer effectively to real-world tasks:

| Methods | Training Data | Augmented | Rel3D | SpatialSense |
|---|---|---|---|---|
| SFT-2 | Rel3D | ✗ | 53.6% | 50.8% |
| SFT-50 | Rel3D | ✗ | 55.4% | 46.8% |
| GRPO | Rel3D | ✗ | 50.9% | 48.2% |
| GRPO-AUG | Rel3D | ✓ | 48.3% | — |
| SFT-SS | SpatialSense | ✗ | 37.7% | 76.5% |

Surprisingly, GRPO underperformed SFT on Rel3D (50.9% vs 53.6% and 55.4%), and every Rel3D-trained model stayed near random chance (50%) on both test sets. Adding depth images and bounding boxes as augmented inputs provided no benefit (48.3% on Rel3D). By contrast, the model trained directly on SpatialSense (SFT-SS) reaches 76.5% on SpatialSense but only 37.7% on Rel3D, which suggests a substantial domain gap between synthetic and real imagery.

Bias Mitigation

VLMs demonstrated unexpected robustness to dataset-induced bias:

| Train Data | Qwen-SFT | Qwen-GRPO |
|---|---|---|
| VSR | 82.0 | 84.8 |
| Biased VSR | 84.6 | 82.3 |
| Strongly Biased VSR | 79.9 | 80.7 |

Even under extreme textual bias, where a text-only classifier reaches 100% accuracy, model performance remained largely stable. GRPO provided no consistent advantage over SFT in mitigating bias: it leads on the original and strongly biased variants but trails on the biased one. These results suggest that VLMs’ pre-training and instruction tuning may make them robust to spurious correlations of this kind.

Prompt Engineering

Soft prompt tuning proved ineffective for inducing reasoning behavior. With only 5 soft prompt tokens and 4 training epochs, the model failed to follow required output formats (0% accuracy under strict evaluation). While training loss decreased significantly when learning from GRPO-generated reasoning traces, no accuracy improvements materialized at test time, likely because the GRPO-generated traces themselves exhibit poor reasoning-answer alignment.

References

- [1] GRPO: Zhihong Shao, Peiyi Wang, Qihao Zhu, Runxin Xu, Junxiao Song, Xiao Bi, Haowei Zhang, Mingchuan Zhang, Y. K. Li, Y. Wu, and Daya Guo (2024). DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models. arXiv preprint arXiv:2402.03300. https://arxiv.org/abs/2402.03300