Segmentation and Classification

Using classic computer vision techniques for segmentation and extraction, and deep learning for classification

The project segments, extracts, and classifies coins in images using computer vision techniques. The images show coins on three background types: neutral, noisy (with visual interference), and backgrounds containing hands.

Methodology

Segmentation: Hough Transforms

Initially, a straightforward thresholding approach was applied to isolate coins from their backgrounds. However, this simple method struggled with the project’s diverse image conditions: neutral backgrounds, noisy interference, and images containing hands. The main challenges were coins overlapping with background elements and inconsistent segmentation across image types.

To overcome these limitations, we extended the pipeline with morphological operations such as dilation to refine segment boundaries and merge broken components. The key breakthrough was using the Hough transform to detect the circular shape of the coins. This proved essential in complex cases where coins were merged together or attached to the background, and it allowed us to extract individual coin regions reliably.

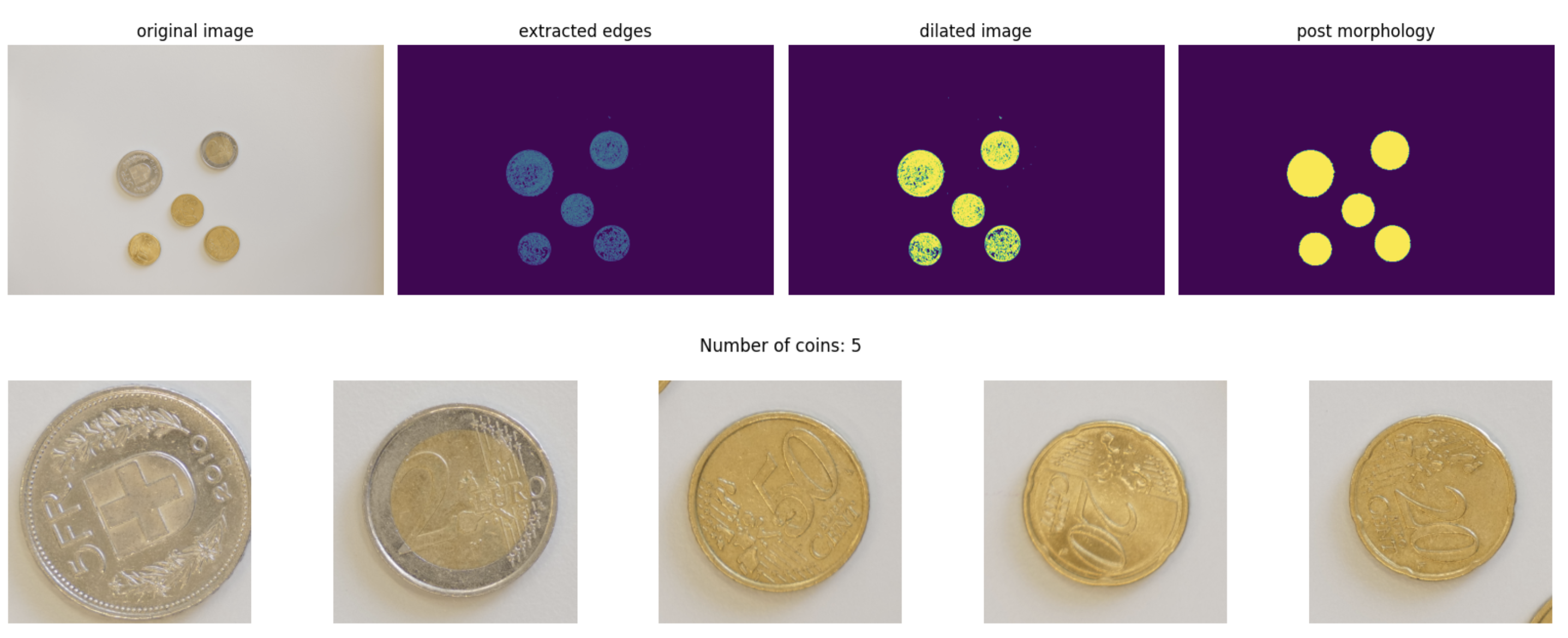

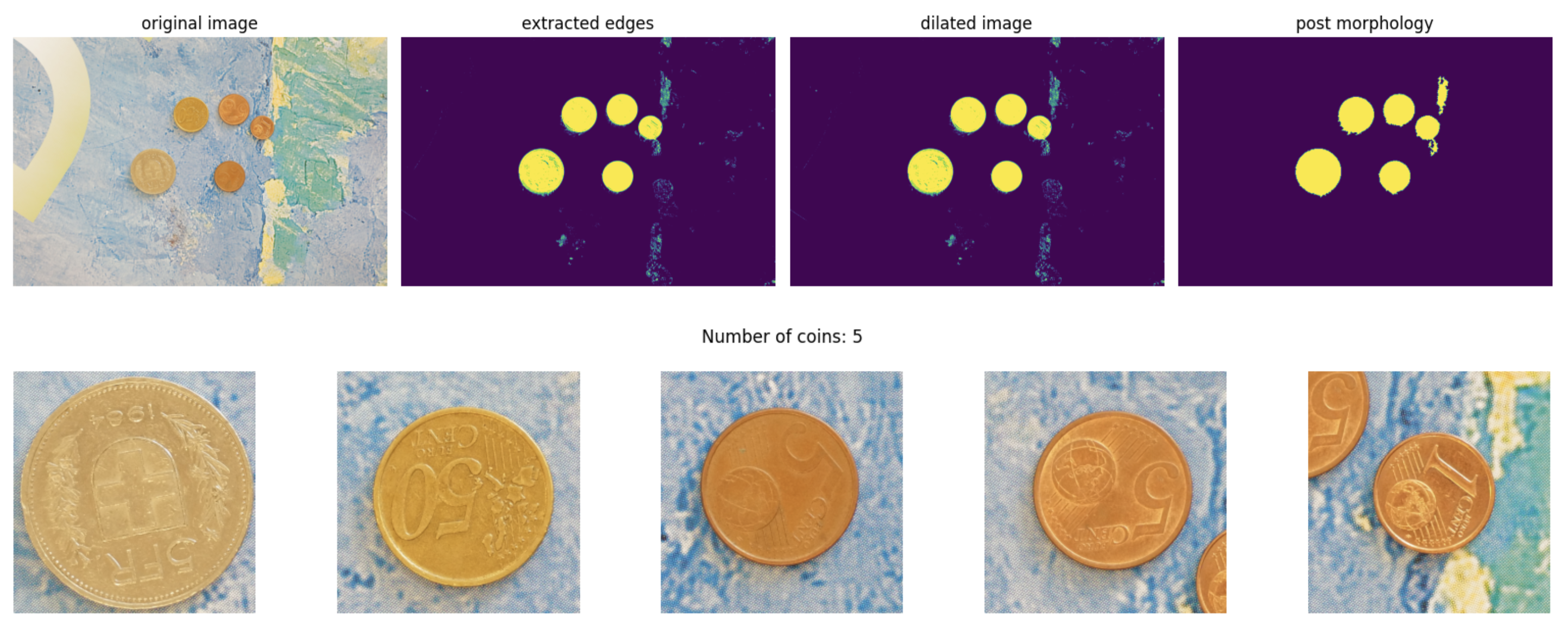

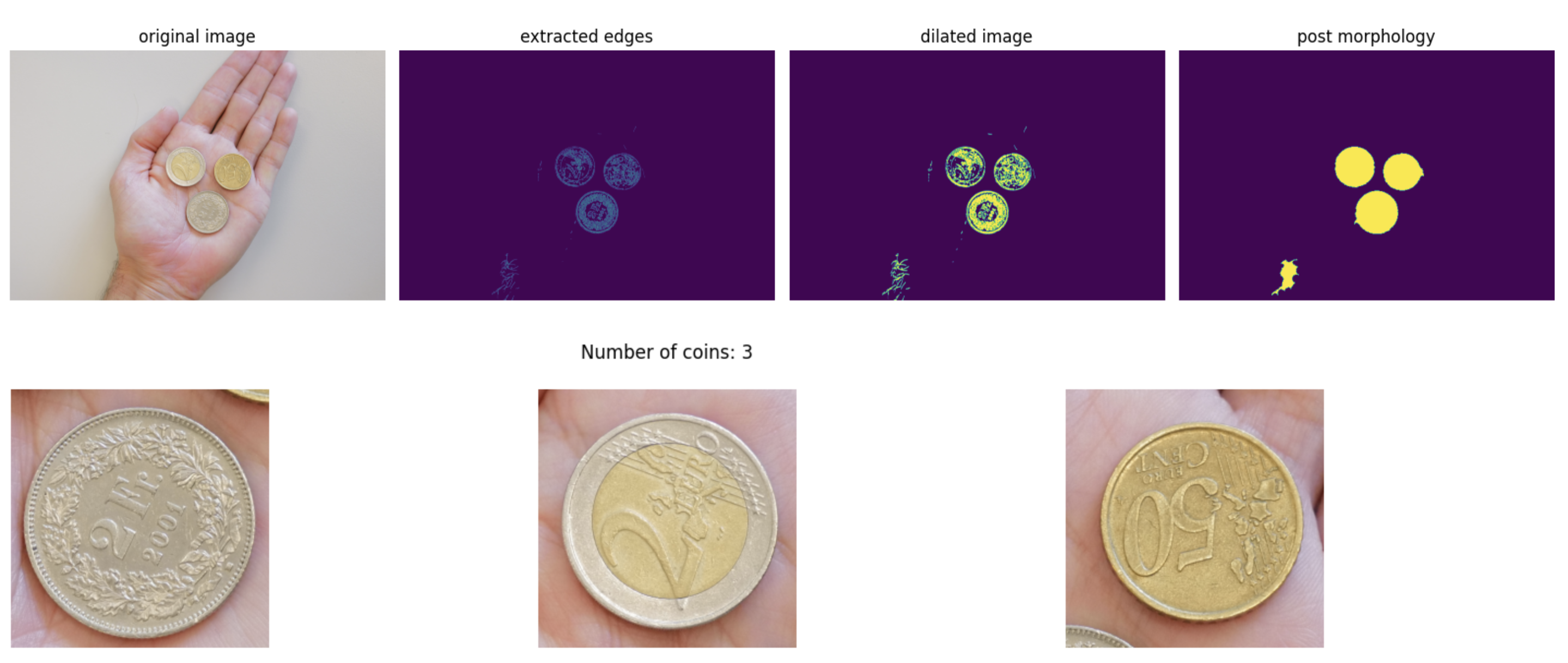

The final segmentation pipeline consists of four steps:

- Background Detection - Using the standard deviation of specific color channels to identify the image type (Noisy/Neutral/Hand)

- Edge Detection - Using the Canny algorithm (hand/neutral) or thresholding on the H and S channels (noisy), adapted per background type

- Morphological Operations - Dilation and other morphological operations to remove noise, adapted per background type

- Hough Transform - Detecting coins as circular objects, separating merged coins and removing background attachments

Some examples of segmentation are given below for each background type:

Classification: ResNet50

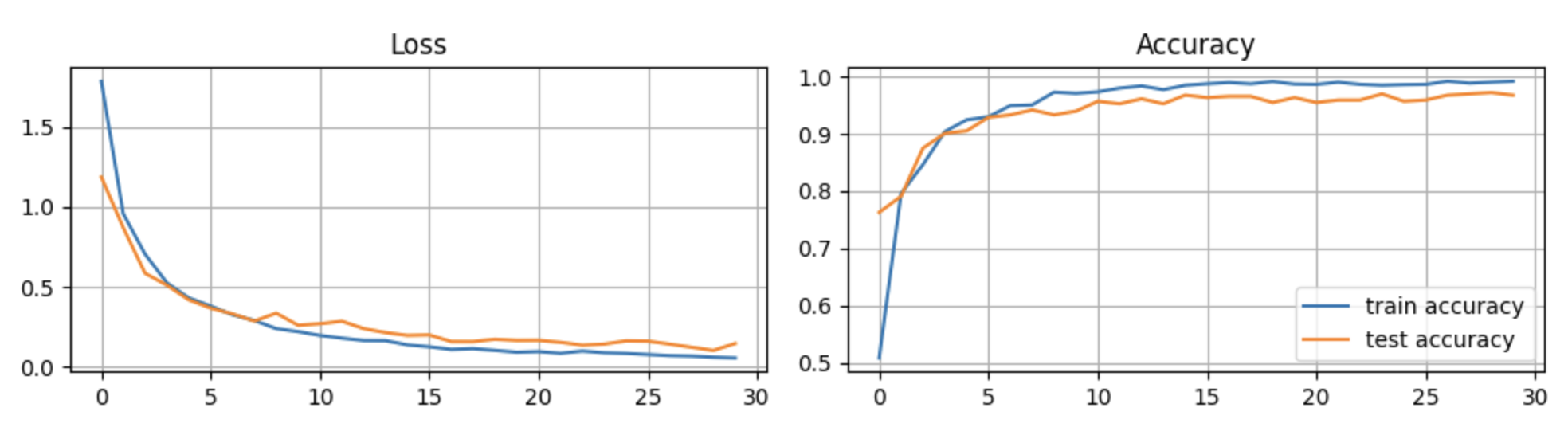

Once the coins were segmented, the extracted circular regions were passed on for classification. We manually labeled the coins and trained a ResNet50 model to learn discriminative features for each coin class. The model was validated with 5-fold cross-validation to assess how robust its performance is across different data splits.

Training showed steady improvement: in each fold, training and test loss converged and accuracy increased. The loss and accuracy curves indicate that the model learned successfully without significant overfitting.

Results

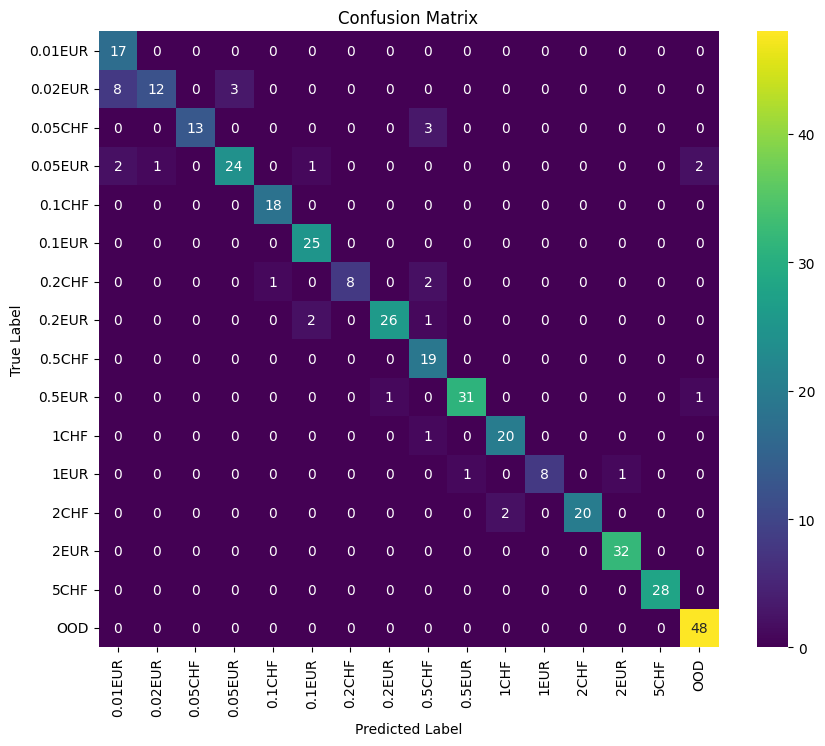

The final model evaluation on held-out test data showed strong classification performance, summarized in the final confusion matrix of the evaluation.
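For reference, a confusion matrix of this kind can be computed and summarized with a few lines of NumPy. This is an illustrative sketch with hypothetical helper names, not the project's evaluation code; `sklearn.metrics.confusion_matrix` uses the same convention of true classes as rows and predicted classes as columns.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i, j] counts samples whose true class is i and predicted class is j."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    # np.add.at accumulates correctly even when the same (i, j) pair repeats.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def summarize(cm):
    """Overall accuracy and per-class recall (diagonal over row totals)."""
    accuracy = np.trace(cm) / cm.sum()
    recall = cm.diagonal() / np.maximum(cm.sum(axis=1), 1)
    return float(accuracy), recall
```

For example, `confusion_matrix([0, 0, 1, 2, 2, 2], [0, 1, 1, 2, 2, 0], 3)` gives `[[1, 1, 0], [0, 1, 0], [1, 0, 2]]`: the diagonal holds correct predictions (accuracy 4/6), and off-diagonal cells show which coin classes are confused with each other.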

Here are some example predictions from the final model: