Stance Detection

Fine-tuning Large Language Models for argument stance detection in unseen domains

Stance detection is the task of determining whether an argument is in favor of, against, or neutral towards a given topic. It has applications in social media analysis, misinformation detection, and the study of political discourse. Our work explores how well Large Language Models (LLMs) can be fine-tuned for this task and, importantly, how well the fine-tuned models generalize to unseen datasets.

This project is part of CommPass, a larger initiative aimed at creating awareness about media polarity by providing readers with visualizations showing where content sits in the “space” of media events like the Russia-Ukraine war or COVID-19.

Methods

Models and Fine-Tuning Approach

We experimented with three LLMs:

- Mistral-7B - 7 billion parameters, with architectural features such as grouped-query attention

- Llama-2-7B - 7 billion parameters

- Phi-1.5 - a smaller 1.3 billion parameter model trained primarily on synthetic, textbook-style data

Rather than fine-tuning all parameters, which would be computationally expensive, we used Low-Rank Adaptation (LoRA$^{[1]}$), a parameter-efficient technique that freezes the pre-trained weights and inserts trainable rank-decomposition matrices into selected layers. This reduces the number of trainable parameters by orders of magnitude while largely preserving performance.
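To make the savings concrete, here is a minimal sketch of the parameter arithmetic behind LoRA; it is not the project's training code. For a frozen weight matrix $W \in \mathbb{R}^{d \times k}$, LoRA trains $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$ so that the adapted weight is $W + BA$. The function name and the 4096 x 4096 layer shape are assumptions chosen for illustration.

```python
from typing import Tuple


def lora_param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Return (full, lora) trainable parameter counts for one weight matrix."""
    full = d_out * d_in            # parameters updated when training W directly
    lora = rank * (d_out + d_in)   # B is d_out x rank, A is rank x d_in
    return full, lora


# Assumed layer shape for illustration: a square 4096 x 4096 projection.
for rank in (1, 8, 16, 64):
    full, lora = lora_param_counts(4096, 4096, rank)
    print(f"rank={rank:>2}  trainable={lora:>9,}  share of full={lora / full:.4%}")
```

Because the count grows linearly with the rank, even the largest rank in this loop trains only a small fraction of the weights in such a layer.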

Datasets

We trained and evaluated on two distinct datasets to test generalization:

- SemEval2016 - Twitter data focusing on six targets (Abortion, Atheism, Climate Change, Feminist Movement, Hillary Clinton, Donald Trump) with three labels: Favor, Neutral, Against

- IBM-Debater - Claims and evidence from Wikipedia articles covering 33 controversial topics, with only two labels: PRO and CON

A key difference: SemEval uses short targets (1-2 words) while IBM-Debater uses complete sentences.
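Training on both datasets requires bringing their different label sets and target formats into one shape. The sketch below shows one possible way to do that; the source does not describe how labels were merged or how inputs were formatted, so the label mapping, the prompt template, and the names `LABEL_MAP` and `to_example` are all hypothetical.

```python
# Hypothetical shared label space; the actual merging scheme is not given in the source.
LABEL_MAP = {
    "semeval": {"FAVOR": "favor", "AGAINST": "against", "NEUTRAL": "neutral"},
    "ibm": {"PRO": "favor", "CON": "against"},
}


def to_example(dataset: str, target: str, text: str, label: str) -> dict:
    """Convert one record from either dataset into a common (prompt, label) form."""
    unified = LABEL_MAP[dataset][label.upper()]
    # Assumed prompt template: short targets and full-sentence topics share one slot.
    prompt = f"Target: {target}\nText: {text}\nStance:"
    return {"prompt": prompt, "label": unified}


# Placeholder strings stand in for real tweets, topics, and claims.
examples = [
    to_example("semeval", "Atheism", "placeholder tweet text", "Against"),
    to_example("ibm", "placeholder topic sentence", "placeholder claim text", "PRO"),
]
for ex in examples:
    print(ex["label"], "|", ex["prompt"].splitlines()[0])
```

With a mapping like this, IBM-Debater records simply never produce the neutral label, while SemEval records can produce all three.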

Experiments

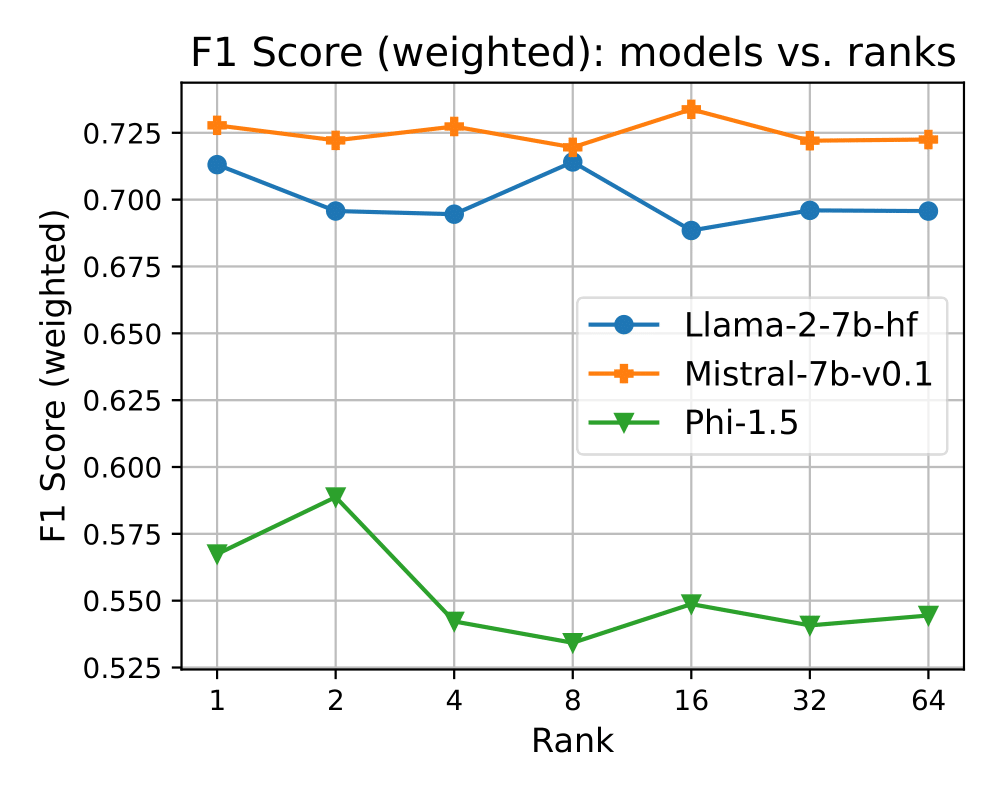

Finding the Right LoRA Rank

We tested LoRA ranks from 1 to 64 on the full SemEval dataset. Mistral consistently outperformed Llama and Phi, but performance showed no clear trend with rank: lower ranks performed about as well as higher ones.

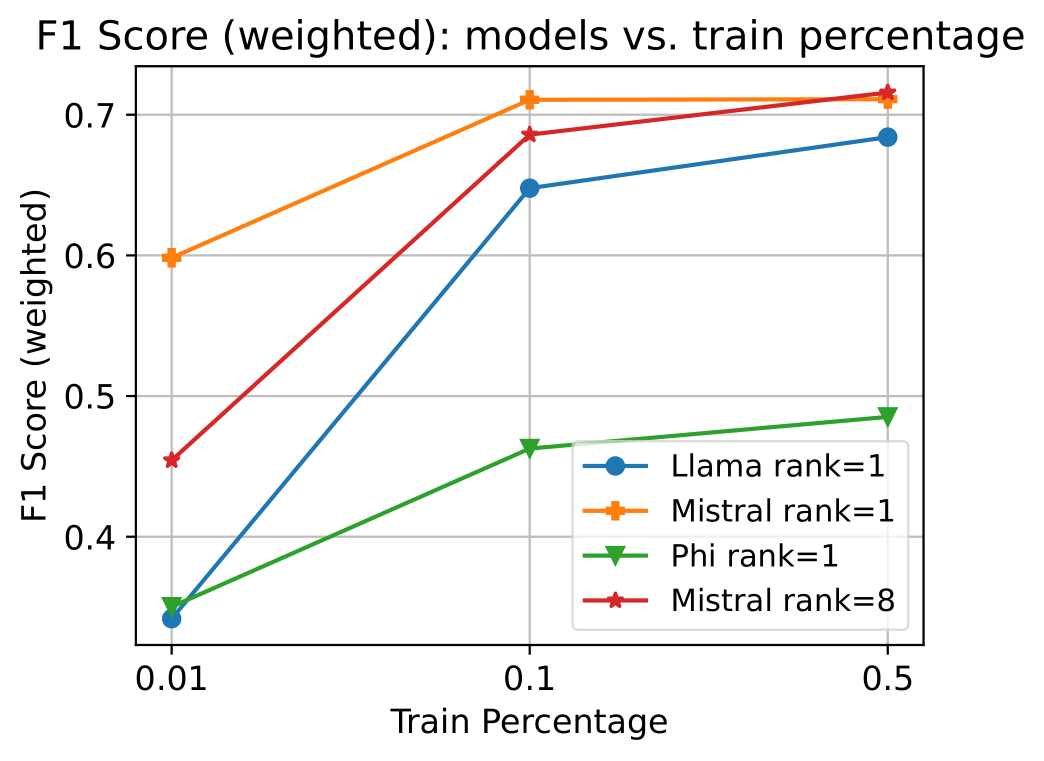

Low-Data Regimes

We tested how well the models perform when fine-tuned on limited data (1%, 10%, and 50% of the training set). Mistral again proved superior, especially in the low-data scenarios. The best rank depended on data volume: rank 1 worked better with less data, while rank 8 improved as the amount of data grew (likely because higher ranks overfit small datasets).
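One way to build such reduced training sets is sketched below. The source does not say how the subsets were drawn, so per-label (stratified) sampling, the fixed seed, and the helper name `stratified_subsample` are assumptions. The records are synthetic stand-ins, not project data.

```python
import random
from collections import defaultdict


def stratified_subsample(records, fraction, seed=0):
    """Keep roughly `fraction` of the records for each label (at least one per label)."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for rec in records:
        by_label[rec["label"]].append(rec)
    subset = []
    for label in sorted(by_label):  # sorted for reproducible ordering
        group = by_label[label]
        k = max(1, round(len(group) * fraction))
        subset.extend(rng.sample(group, k))
    rng.shuffle(subset)
    return subset


# Synthetic stand-in data: 200 records for each of three labels.
train = [{"id": i, "label": lab}
         for i, lab in enumerate(["favor", "against", "neutral"] * 200)]
for fraction in (0.01, 0.10, 0.50):
    print(fraction, len(stratified_subsample(train, fraction)))
```

Sampling per label keeps the class balance of the full set, which matters most at 1%, where a plain random draw could leave a label nearly absent.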

Main Results

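Our best model - Mistral with LoRA rank 16, trained on 70% of both the SemEval and IBM-Debater datasets - outperformed all baselines on the SemEval and IBM-Debater averages. At the level of individual targets, the one exception is Atheism, where both BERTweet and RoBERTa scored higher: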

Performance Table

| Model | Abortion | Atheism | Climate Change | Feminist Movement | Hillary Clinton | SemEval (avg) | IBM (avg) |
|---|---|---|---|---|---|---|---|
| BERTweet (baseline) | 0.65 | 0.76 | 0.79 | 0.65 | 0.69 | 0.70 | - |
| RoBERTa (baseline) | 0.54 | 0.79 | 0.80 | 0.64 | 0.71 | 0.68 | - |
| StanceBERTa (baseline) | - | - | - | - | - | - | 0.61 |
| Mistral Zero-shot | 0.54 | 0.33 | 0.55 | 0.57 | 0.66 | 0.54 | 0.44 |
| Mistral Fine-tuned (Ours) | 0.71 | 0.73 | 0.84 | 0.76 | 0.80 | 0.76 | 0.92 |

Surprising Findings

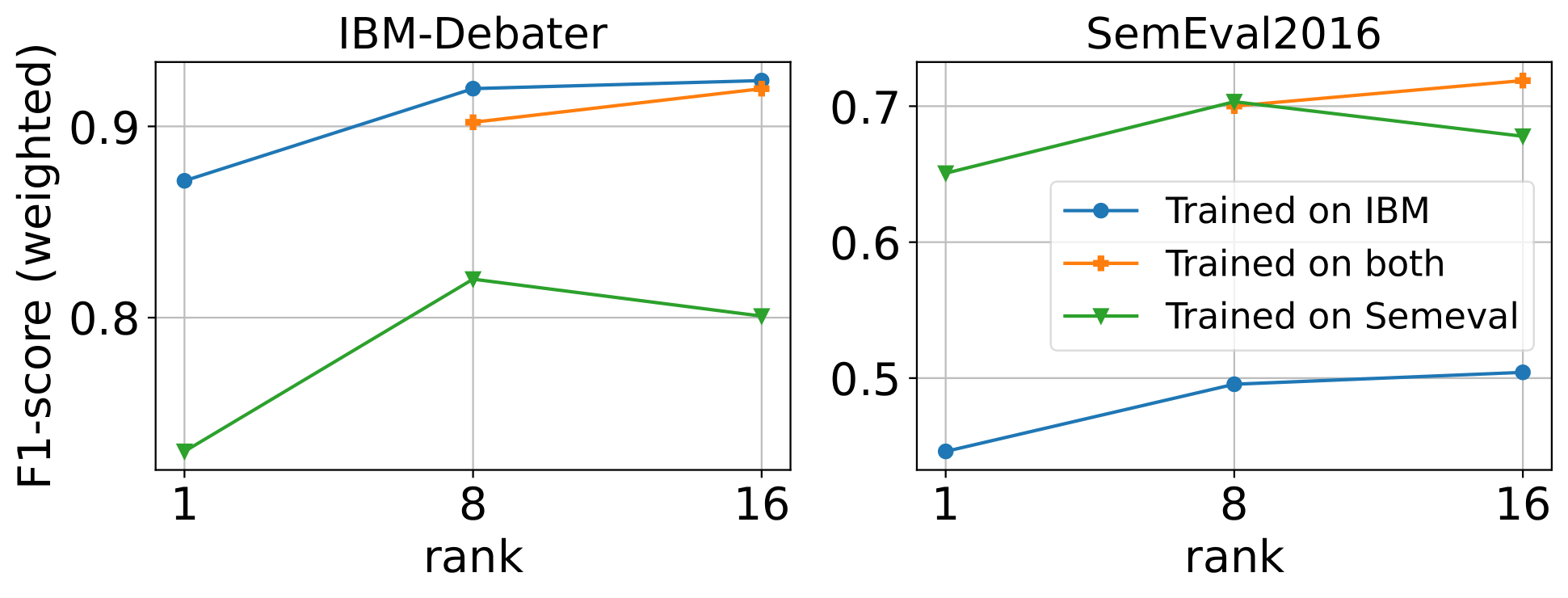

-

Cross-dataset generalization: Models fine-tuned on SemEval alone generalized remarkably well to IBM-Debater, outperforming the baseline despite never seeing that data format during training.

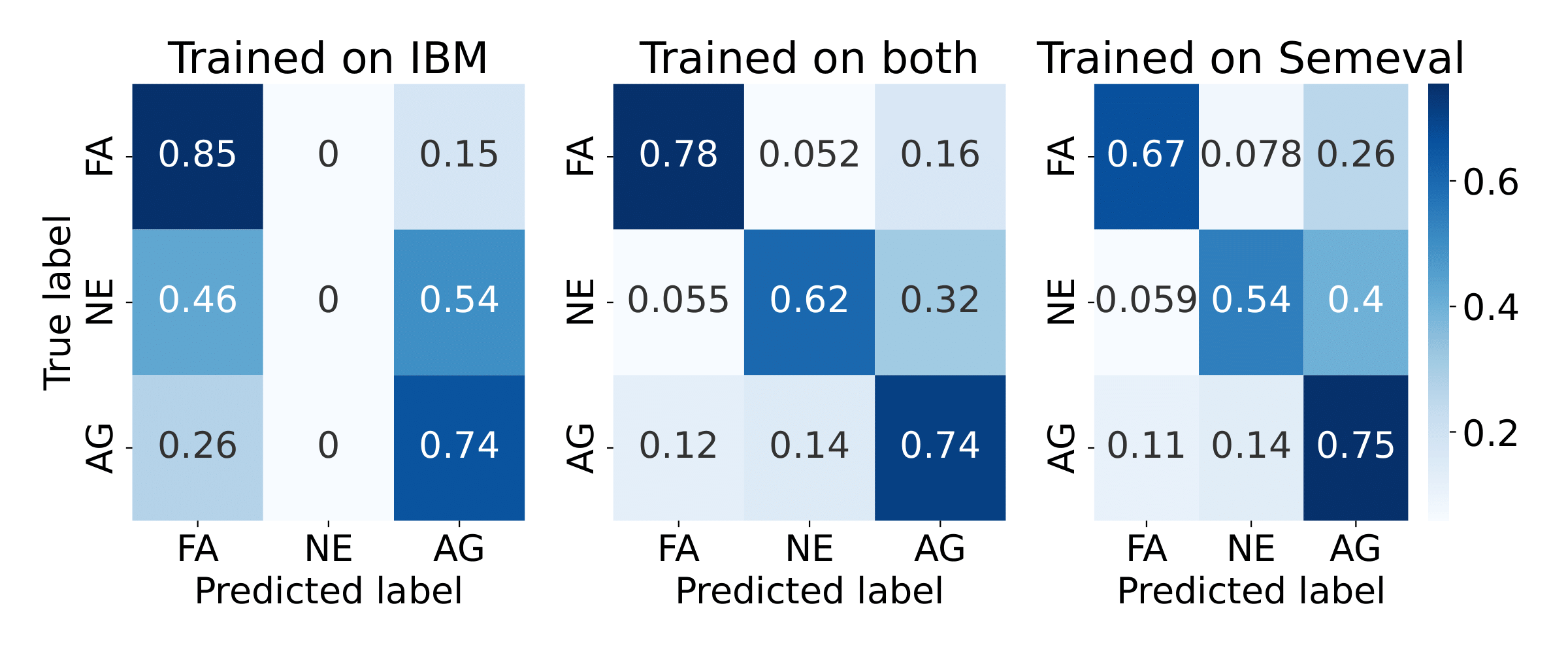

-

Training on both datasets improved neutral class recall on SemEval, even though IBM-Debater has no neutral labels - suggesting the model learned more nuanced representations.

-

Fine-tuning on SemEval and extrapolating to IBM might lead to better results than directly fine-tuning on IBM alone.
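For reference, the neutral class recall mentioned above is the share of truly neutral examples that the model also labels neutral. A minimal sketch of computing recall per label follows; the helper name `per_class_recall` is hypothetical, and the toy labels are for illustration only and are not results from this project.

```python
from collections import Counter


def per_class_recall(gold, pred):
    """Recall per label: correctly predicted items / all gold items of that label."""
    totals = Counter(gold)
    hits = Counter(g for g, p in zip(gold, pred) if g == p)
    return {label: hits[label] / totals[label] for label in totals}


# Toy labels for illustration only; these are not results from the project.
gold = ["favor", "against", "neutral", "neutral", "against", "neutral"]
pred = ["favor", "against", "neutral", "against", "against", "favor"]
recall = per_class_recall(gold, pred)
print(recall)
```

Reporting recall per label, rather than a single aggregate score, is what makes a change in one class such as neutral visible.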

References

- [1] Edward J. Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen (2021). LoRA: Low-Rank Adaptation of Large Language Models. arXiv preprint arXiv:2106.09685. https://arxiv.org/abs/2106.09685